Machine learning is a field of data science that has developed rapidly in recent years. Besides a growing range of applications, ML has also become more accessible. A few years ago it was mainly the domain of scientists, then of developers; nowadays it is also within reach of analysts. The development of the Python and R programming languages supports this trend. Today, analysts, even without advanced knowledge of mathematics or statistics, can build machine learning models with packages such as Scikit-learn and generate financial benefits from deploying them. Where does Tableau fit into this puzzle? I will try to explain in this post.

Exploring data in Tableau

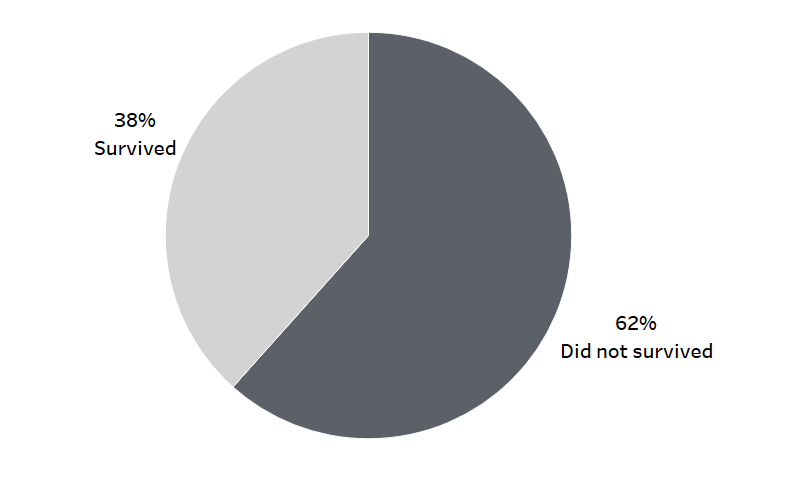

When starting any machine learning project, the first step is to explore the data. Tableau is helpful here, as it lets you analyze the data quickly using drag and drop. In the simplest case, you have a target variable plus categorical (descriptive) and numerical features. Before creating an ML model, you first need to understand what the data tells you and what the problem is (in other words: what question you are trying to answer). Let’s start by exploring the target variable:

You can clearly see that the dataset is quite balanced: the target variable is fairly evenly distributed. A pie chart is also suitable here; when you have two categories and want to show each part of the whole, it is an excellent choice.

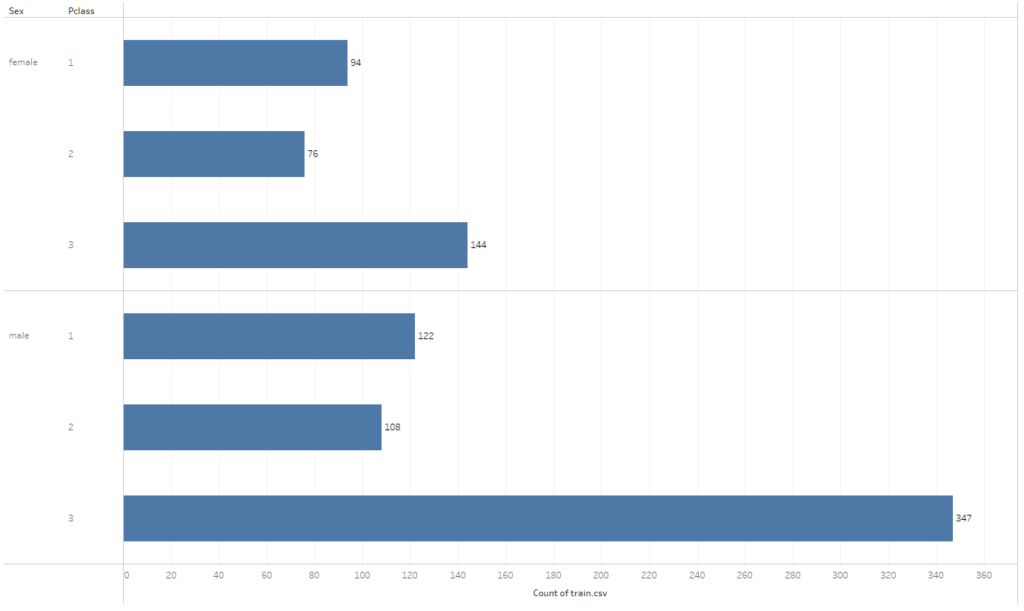
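The balance check described above can also be cross-checked outside Tableau. The sketch below is only an illustration: it assumes a hypothetical binary column named target, and the values are made up rather than taken from the dataset used in this post.

```python
import pandas as pd

# Hypothetical target column: 1 = positive class, 0 = negative class.
target = pd.Series([0, 1, 0, 1, 1, 0, 0, 1, 0, 1], name="target")

# Share of each class; values close to 0.5 indicate a balanced binary target.
class_share = target.value_counts(normalize=True).sort_index()
print(class_share)
```

If one class held, say, 90% of the rows, the dataset would be imbalanced and the choice of model and metric would need more care.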

The other variables, both categorical and numerical, are the next area to explore. Bar charts are the recommended choice for categorical variables. The example below shows the distribution of passengers by gender and travel class:

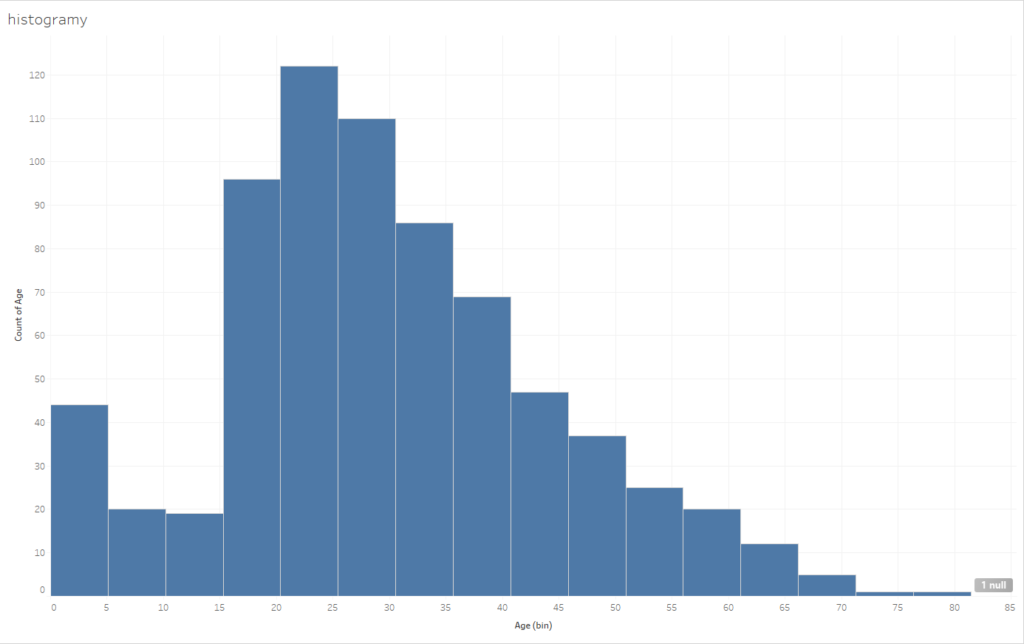

Histograms are the best option for numerical data. You can select this chart in Show Me – all you need is the measure that you want to analyze:

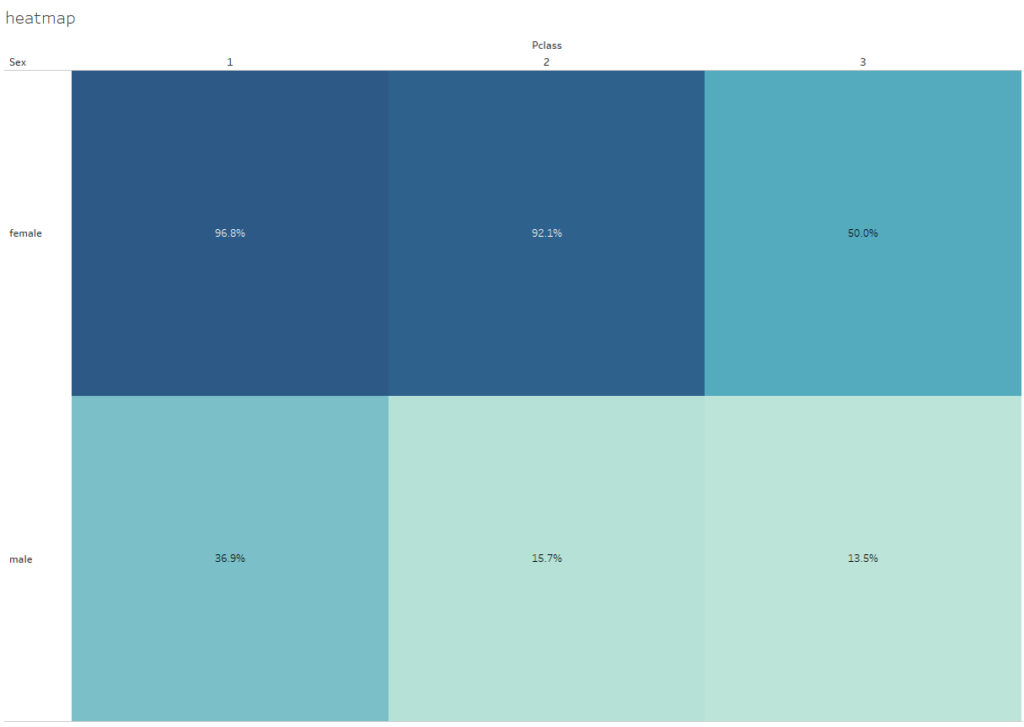

Is that all? Of course not. You can combine the variables, for example, to build a heat map:

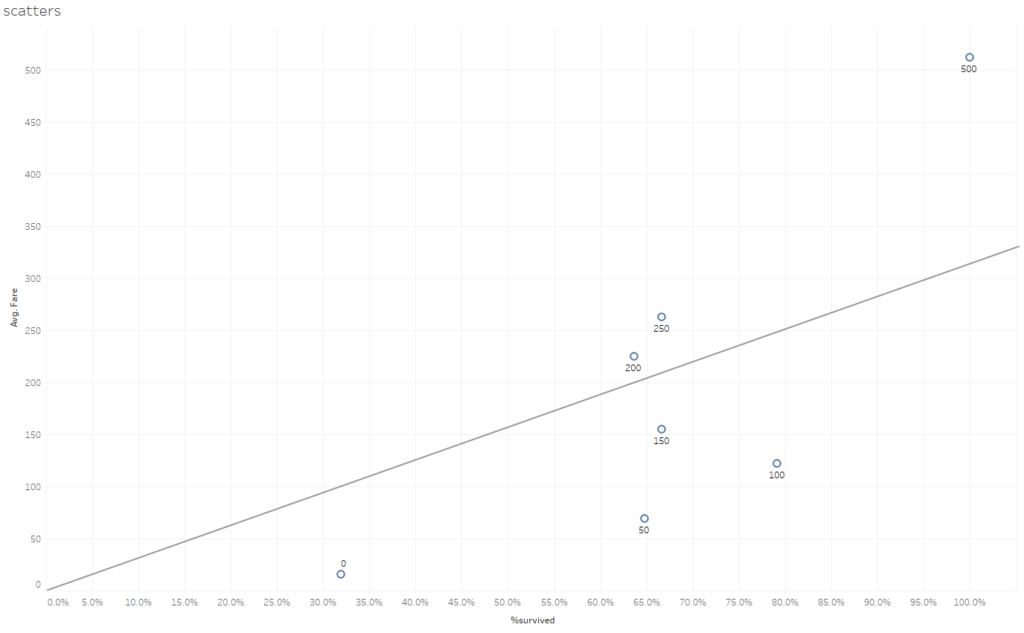

Or a scatter plot:

With the Tableau interface, you can quickly visualize the data you need and build an understanding of the information that will be used to create your machine learning model. As a result, your analytical process becomes shorter.

Configuring the environment to connect Tableau with Python

The theory behind building ML models is far beyond the scope of this post; however, I will demonstrate how to use a ready-made model, in the form of a script, directly in Tableau. To use such a model, you need to install a Python environment on your computer (for example, the free Anaconda distribution). You will also need the Pandas, NumPy and Scikit-learn packages, plus the TabPy library for integration with Tableau. The easiest way to install each package is with the command pip install name_of_package. Once TabPy is installed, you can start it by entering tabpy in the Anaconda Prompt:

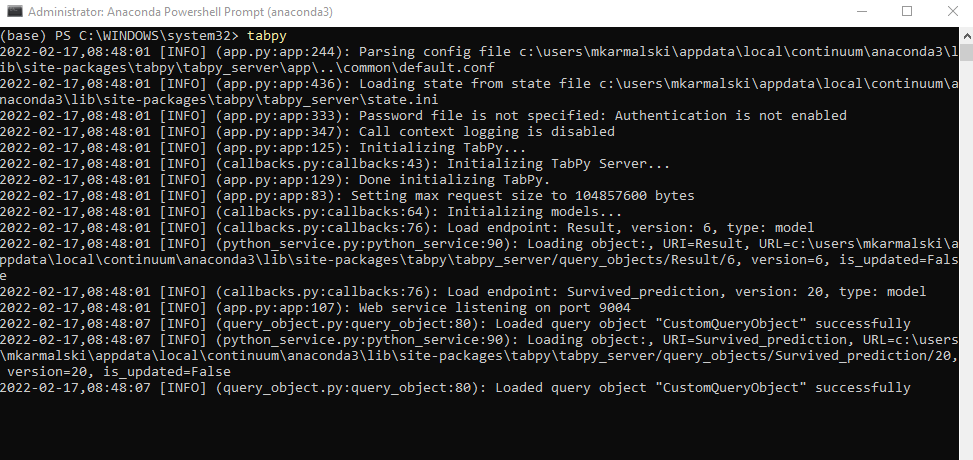

After you press Enter, the TabPy server will start on your computer (localhost):

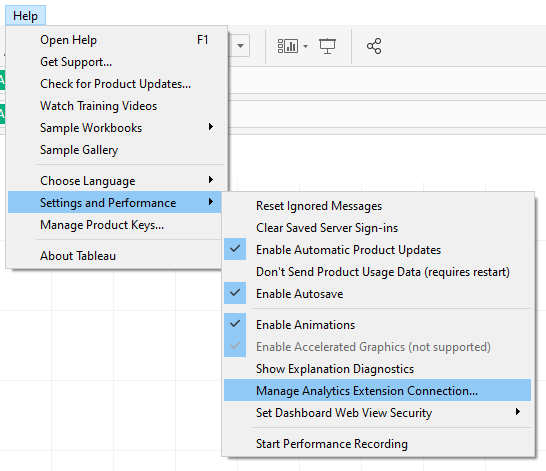
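If the server does not start as shown, a quick check that the required packages are visible to the active Python environment can help. This is a minimal sketch using only the standard library; the list of import names is my assumption based on the packages named above (Scikit-learn is imported as sklearn).

```python
import importlib.util

# Import names of the packages mentioned above (scikit-learn is imported as "sklearn").
required = ["pandas", "numpy", "sklearn", "tabpy"]

# find_spec returns None when a top-level package cannot be found in this environment.
missing = [name for name in required if importlib.util.find_spec(name) is None]

if missing:
    print("Not installed in this environment:", ", ".join(missing))
else:
    print("All required packages are importable.")
```

Any name printed as missing can then be installed with pip before starting TabPy again.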

The next step is to connect to this server from Tableau. To do that, select Help > Settings and Performance > Manage Analytics Extension Connection:

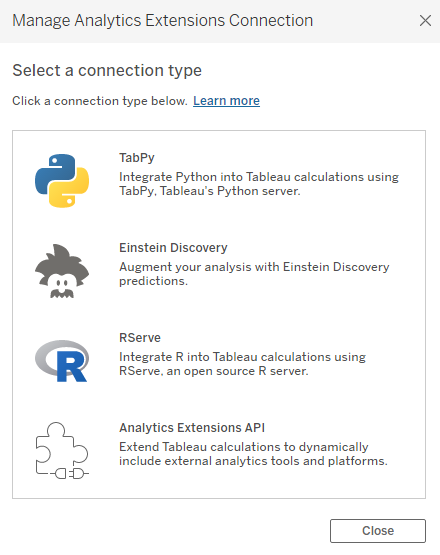

Next, a prompt will ask you to select the connection type – besides TabPy (Python), you can also connect to RServe (R), Einstein Discovery or another extension. Select TabPy:

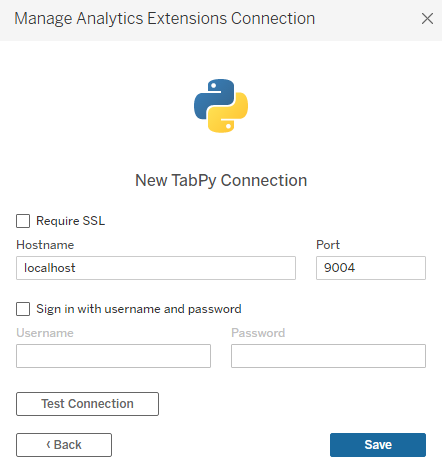

A connection configuration window will appear. If you launched TabPy on your own computer, enter localhost as the Hostname and 9004 as the Port. If TabPy is hosted on an external server, enter that server’s connection details here instead. It is also possible to configure TabPy in Tableau Online.

If everything works, clicking Test Connection will display a message confirming that the connection was successful.

Now, you are ready to work with the model in Tableau.
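If the connection test fails instead, it can be useful to check whether the TabPy server answers at all, independently of Tableau. The sketch below assumes the local setup described above (localhost, port 9004) and queries TabPy's /info endpoint with the standard library; the function name tabpy_info is mine, purely for illustration.

```python
import json
from urllib.error import URLError
from urllib.request import urlopen


def tabpy_info(host="localhost", port=9004, timeout=3):
    """Return the server's /info payload as a dict, or None if it is unreachable."""
    url = f"http://{host}:{port}/info"
    try:
        with urlopen(url, timeout=timeout) as response:
            return json.loads(response.read().decode("utf-8"))
    except (URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error or a non-JSON answer.
        return None


info = tabpy_info()
print("TabPy is not reachable" if info is None else info)
```

A None result usually means the server is not running or is listening on a different host or port than the one entered in Tableau.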

Using Machine Learning models in Tableau

Once your environment and the TabPy server are configured, you can start integrating machine learning models with Tableau. There are two ways to use Python scripts in Tableau:

- Insert the script directly in Tableau

- Create the model (script) in Jupyter Notebook or a similar tool, then reference the model from Tableau.

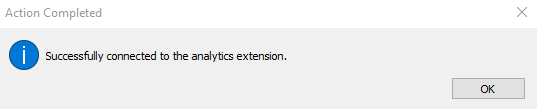

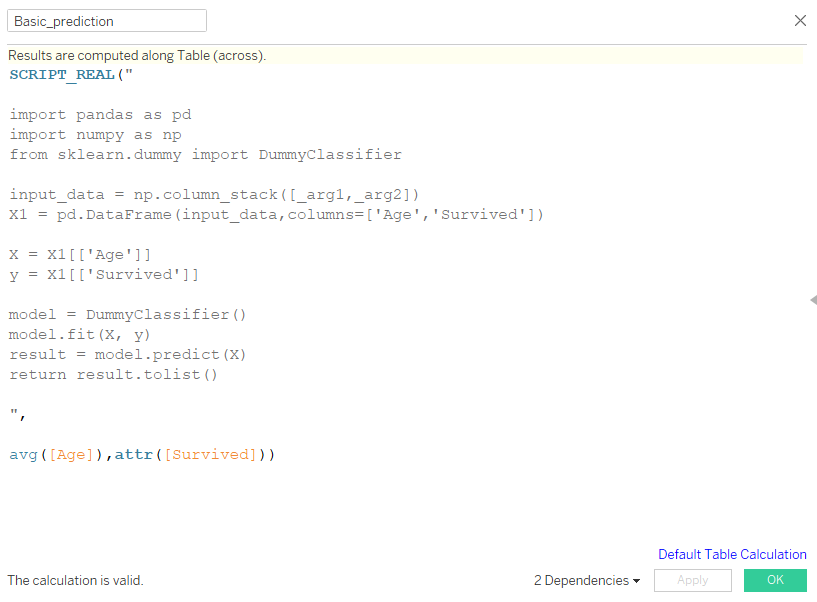

Let’s start with the first case. To insert the script, create a calculated field that uses the SCRIPT_REAL function:

The script itself goes between the quotation marks (" "). The code is slightly different from the code you would write outside of Tableau. To understand it, let’s break the code down into its elements.

1. The first element is importing the libraries – this step is identical:

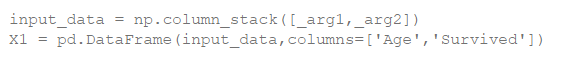

2. Next, you need to load the data – this step is different. When writing a script in, for example, Jupyter, you would load the data from a file at this point. In Tableau, the data is already there. All you need to do is list the arguments, name them and create a DataFrame:

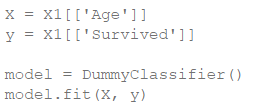

3. The next step is to create an ML model. The example below uses a very simple model. The structure of the code is the same in Tableau and in Jupyter:

4. The last step is to return the prediction value – when doing this in Tableau, it’s important to return the results as a list:

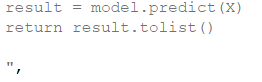

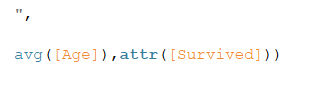

5. The final element is the remaining arguments of the SCRIPT_REAL function, which follow the script: here you specify which fields from the data in Tableau are passed to the model in place of the placeholders defined earlier (_arg1 and _arg2 from point 2).

The calculated field created in this way operates on arrays: it passes the specified arguments to the script as lists and returns a prediction value for each row.
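The five steps above can be sketched as a plain Python function that mimics what happens inside the script. This is not the exact script from the screenshots: the choice of LogisticRegression, the meaning of the arguments (_arg1 as the target, _arg2 as a single numeric feature), the function name script_body and the sample values are all assumptions made for illustration.

```python
import pandas as pd
from sklearn.linear_model import LogisticRegression


def script_body(_arg1, _arg2):
    """Mimics the body of a SCRIPT_REAL script; TabPy passes each argument as a list."""
    # Step 2: build a DataFrame from the arguments supplied by Tableau.
    df = pd.DataFrame({"target": _arg1, "feature": _arg2})
    # Step 3: train a deliberately simple model (an assumed choice).
    model = LogisticRegression()
    model.fit(df[["feature"]], df["target"])
    # Step 4: return plain Python floats in a list, one value per input row.
    return [float(p) for p in model.predict(df[["feature"]])]


# Hypothetical sample values standing in for the data Tableau would send.
predictions = script_body([0, 0, 1, 1, 0, 1], [7.3, 8.1, 71.3, 53.1, 8.5, 30.0])
print(predictions)
```

In the calculated field, only the imports and the function body go between the quotation marks, and the field expressions that replace _arg1 and _arg2 follow as the remaining SCRIPT_REAL arguments.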

Integration of more advanced models

The second way to integrate an ML model with Tableau is to write the code in a separate environment and connect it to Tableau. Writing code directly in Tableau can be cumbersome, particularly when you need to work with tools such as Jupyter. With this approach, development takes place in a separate environment, and Tableau serves as the access point. To do so, you create a machine learning model in a notebook, wrap it in a function that returns the prediction, and then deploy this function to the TabPy server. Step by step:

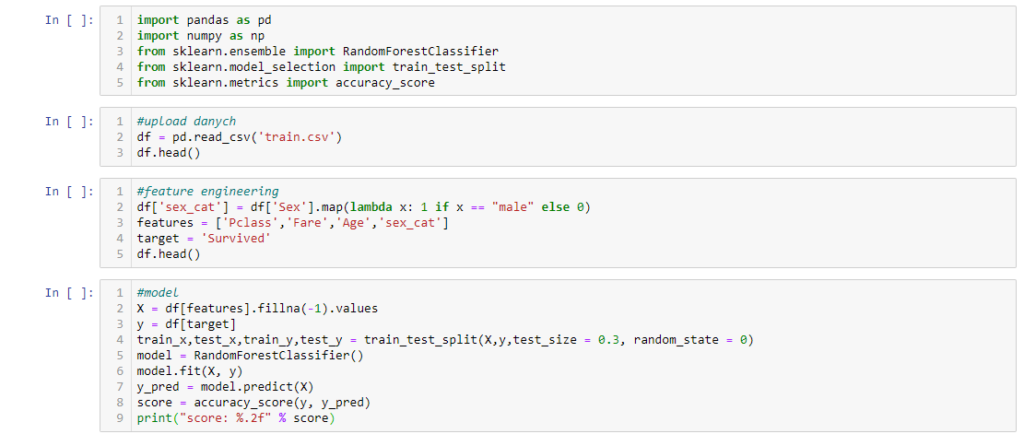

1. Create the model in Jupyter Notebook:

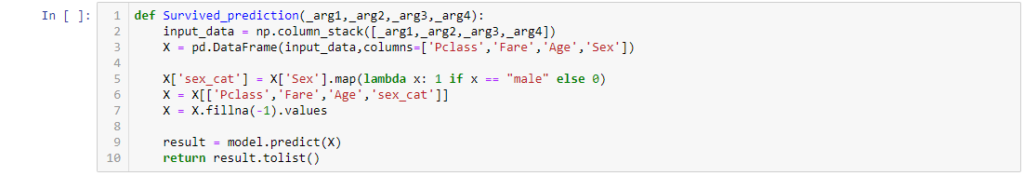

2. Create a function that returns the prediction from the model trained in point 1. Note that this function is similar to the code that you entered in the SCRIPT_REAL function in the previous section:

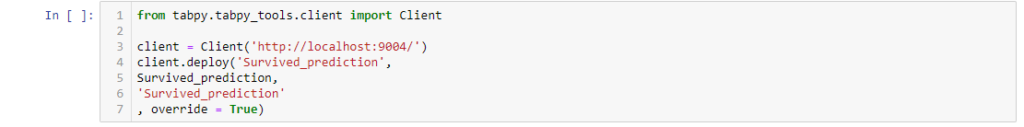

3. Deploy the function from point 2 to the TabPy server:

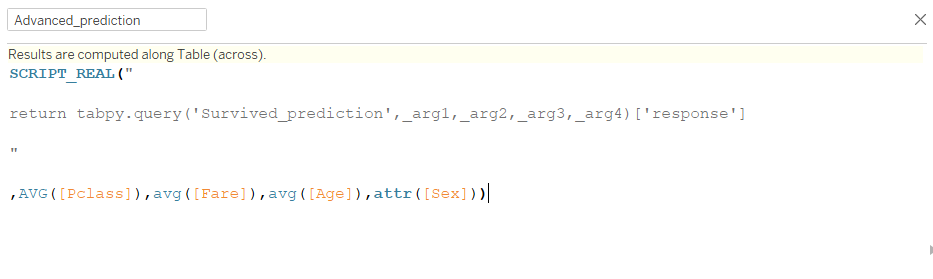

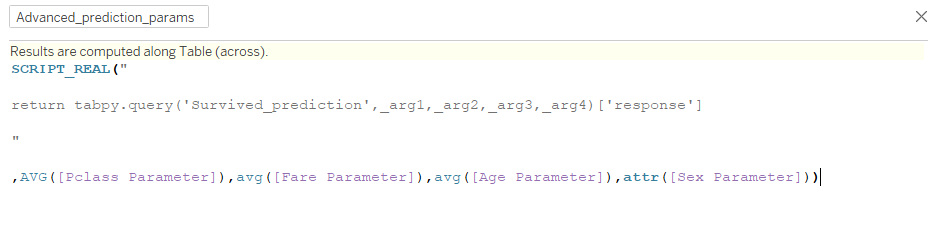
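The three steps above could look roughly like the sketch below. It is an illustration under assumptions, not the notebook from the screenshots: the column names pclass and fare, the training values, the LogisticRegression model and the helper name deploy are hypothetical. Only the function name Advanced_prediction comes from this post, and the Client class is the one provided by TabPy's tabpy_tools.

```python
import pandas as pd
from sklearn.linear_model import LogisticRegression

# Step 1: train the model once, outside Tableau (hypothetical placeholder data).
train = pd.DataFrame({
    "pclass": [1, 3, 2, 3, 1, 2],
    "fare": [71.3, 7.3, 13.0, 8.1, 53.1, 26.0],
    "survived": [1, 0, 1, 0, 1, 0],
})
model = LogisticRegression().fit(train[["pclass", "fare"]], train["survived"])


# Step 2: a function that takes lists, as Tableau sends them, and returns a list.
def Advanced_prediction(_arg1, _arg2):
    features = pd.DataFrame({"pclass": _arg1, "fare": _arg2})
    return [float(p) for p in model.predict(features)]


# Step 3: publish the function to a running TabPy server (assumed to be local).
def deploy(url="http://localhost:9004/"):
    from tabpy.tabpy_tools.client import Client  # imported lazily; needs tabpy installed
    client = Client(url)
    client.deploy("Advanced_prediction", Advanced_prediction,
                  "Returns the predicted class for each row", override=True)


# Local check before deploying; call deploy() once the server is running.
print(Advanced_prediction([1, 3], [80.0, 7.0]))

# Once deployed, a script running in TabPy can call the function by name, e.g.:
#   return tabpy.query('Advanced_prediction', _arg1, _arg2)['response']
```

Passing override=True lets you redeploy the function under the same name after retraining the model.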

When all of the above steps are completed, you still use the SCRIPT_REAL function to access the prediction in Tableau; however, its content is different:

This way you can access the prediction from the model directly in Tableau. You can use it in the following ways:

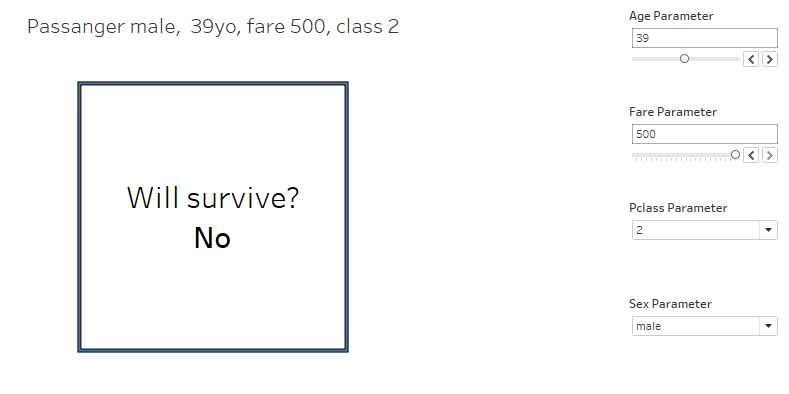

- Simulating the prediction result depending on the variables – to do that, modify the Advanced_prediction function by replacing the argument values with the values of parameters that the user can control. This way, by setting the relevant values, you obtain the model’s prediction of the target variable:

As a result, you will get a simulator of the prediction result:

- Prediction for a dataset – if your model has been trained on historical data, you can feed current or forecast data into Tableau and use the model to create predictions for this data.
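Both uses can be tried in Python before wiring them into Tableau. The sketch below is a rough illustration only: the data, the column names and the simulate helper are hypothetical, and returning a probability rather than a class is my assumption about what a simulator might display.

```python
import pandas as pd
from sklearn.linear_model import LogisticRegression

# Hypothetical historical data used to train the model.
history = pd.DataFrame({
    "pclass": [1, 3, 2, 3, 1, 2, 3, 1],
    "fare": [71.3, 7.3, 13.0, 8.1, 53.1, 26.0, 7.9, 83.2],
    "survived": [1, 0, 1, 0, 1, 0, 0, 1],
})
model = LogisticRegression().fit(history[["pclass", "fare"]], history["survived"])


def simulate(pclass, fare):
    """Predicted probability of the positive class for a single what-if scenario."""
    scenario = pd.DataFrame({"pclass": [pclass], "fare": [fare]})
    return float(model.predict_proba(scenario)[0, 1])


# Simulation: vary one value while holding the other fixed, as a parameter control would.
for fare in (10, 40, 80):
    print(fare, round(simulate(pclass=2, fare=fare), 3))

# Prediction for a dataset: score new rows that the model has not seen.
new_data = pd.DataFrame({"pclass": [1, 3], "fare": [60.0, 9.0]})
new_data["prediction"] = model.predict(new_data[["pclass", "fare"]])
print(new_data)
```

In Tableau, the values passed to simulate would come from parameter controls, while the new rows would come from the connected data source.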

Tableau and Machine Learning are an excellent match

By integrating Python (or R) scripts with Tableau, you can create a particularly useful analytical tool. Tableau excels at visual data analysis, which helps you interpret the data easily. It also offers rich interactivity, which makes data exploration much easier than with Python visualization libraries (Plotly or Matplotlib). Moreover, with TabPy and Tableau Server, Tableau becomes a practical framework for sharing ML models with users across your organization, making the models more accessible.

Mateusz Karmalski, Tableau Author