Nowadays, everything, every object that surrounds us, that we use, that we want to buy leaves a trace. Trace in data form. At different stages this trace can be saved in different ways, in different databases.

In order to make a full analysis we need to extract the data we need from the source. But this is just the beginning. Different sources, different standards, different formats. Data must be consistent – homogeneous – to give a clear and true message. Every company wants to learn other things from their data.

That is why it is so important to prepare this data according to the needs, requirements and standards of each client individually. It is also a stage that transforms data so that it is compatible and can be analyzed as such. Our extracted and transformed data must be loaded (pulled in, uploaded) into the target database, which will be the basis of our analysis.

What possibilities does the ETL tool give us?

The list of possible applications of the ETL tool goes beyond these four most characteristic points, but clearly shows how important it is to use ETL in everyday business operations. It is enough to imagine how hard and laborious it would be to work with data if we had to be an expert on every data source that is processed in our company. If every processing, search, purification had to be done individually. Every day. How much work, resources and time is wasted on such an action – and is it worth it? Especially when there are tools available to use these expenses much more efficiently and… effectively.

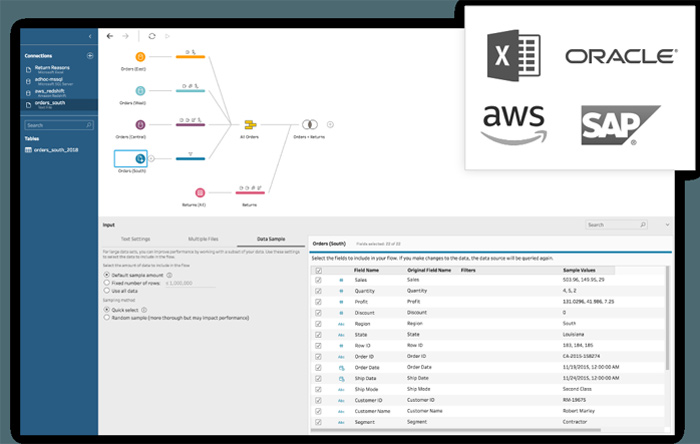

Tableau Prep interface Extraction The extraction process may seem to be the easiest of the data preparation steps – but it is not. At this stage, there are many problems with access to data, with the compatibility of source and target data formats. For data that are easily accessible, these procedures will of course be simple and for data that are not directly accessible, they will be correspondingly more complicated.

When we deal with different types of data, they are usually organized into a structure. It is important that the extraction procedures already introduce pre-selection of data, because extraction of redundant or insignificant data adversely affects the efficiency of the ETL process and the data warehouse being created.

Transformation

During the transformation process, we check whether the records from the databases are correct, need to be cleaned or are unusable. This helps to maintain order in the created base and does not lead to its littering.

The simplest of data conversion procedures is responsible for converting numerical data into characters and vice versa, filling in empty values, changing formats (numbers and dates), changing values e.g. converting units. The transformation processes also allow us to unify values and maintain the integrity of the data being prepared.

Before the data can reach the data warehouse, it must be cleaned, integrated and mapped:

- Detection and, if possible, correction of spelling and dictionary errors, e.g. an error in the name of the city, the full list of which we have in the database,

- detection of correct formats, e.g. correctness of telephone numbers in terms of number of digits,

- detecting and removing inconsistencies between the name of an attribute and its content – for example, it often happens that there is a customer’s first and last name in the ‘Company name’ field,

- value normalization – replacement of blank character strings and spaces with NULL,

- completion of data based on external information sources, e.g. completion of missing postal codes based on addresses.

Data Loading

Loading is the last and most important process in the ETL system. It consists of placing data that have been previously extracted and transformed in a data warehouse.

Use of ETL and impact on business

The data – as well as their availability and real-time analysis – have become the basis of modern business. How you collect, process, combine, store, visualize and analyze your data from a business perspective is now more important than ever before.

Whether you’re looking for data from databases, streaming services, files or other sources, choosing the right toolkit is crucial. A modern ETL solution, designed and built for today’s business environment where data is processed in real time, may be the best solution for your business.

The choice of the ETL tool is, contrary to appearances, one of the most important steps when it comes to data analysis. The way they are prepared, processed and their final shape and time for these processes will be devoted to the basis of our analyses. Always. It will be at the heart of our data retrieval, processing and delivery system. Therefore, the versatility of the ETL tool should be one of the key criteria for choosing a specific solution.

How to select an ETL tool?

We can distinguish 5 areas to be taken into account.

- Connecting to data: the ETL tool should be able to communicate with any data source, regardless of its origin (databases, streaming services, files)

- Efficiency: transferring and modifying data requires adequate computing power, so an ETL tool should be able to cope with the increasing size of our data in the future.

- Transformation flexibility: matching, merging and changing data is crucial for transforming it – most of these operations should be able to be performed with simple drag and drop operations.

- Data quality: the data we start working with is not cleaned and structured. The information contained in our data will only reveal its secrets to us if it is homogenous and ordered.

- Flexible data collection options: an ETL tool should give you the possibility to determine whether to refresh data at intervals, or to refresh only the latest data, the whole database, or maybe only a specific part of it.

There are many ready-made ETL solutions on the market, such as: SAS ETL Studio, Alteryx, Oracle Warehouse Builder, Pentaho Data Integration. They all provide a wide range of functionality and connectivity to a variety of data sources. Their implementation is usually not problematic, but purchase costs can already be a hard barrier to overcome for companies without a sufficiently large budget for such expenses.

This makes the use of Tableau Prep all the more interesting – especially if we think about using Tableau technology in our “main” Business Intelligence. Tableau Prep is “embedded” in the license of Tableau Creator and therefore does not entail additional costs. It has very interesting possibilities to analyze the metadata itself (i.e. data about the data), as well as various transformations, groupings, aggregations, cleansing, etc. of the data itself – even before it is “let in” to Tableau. In a simple, graphical way you can design the ETL process itself, as well as check the results of specific data transformations in real time. At the “output” we get a ready-made Tableau extract, or csv file.

Importantly, a once defined process (so called Tableau Flow, .tfl) can later be started according to a defined scheduler, or on request.

Why use ETL?

The history of ETL processes is almost 50 years old and since then companies have relied on ETL for a consolidated view of their data. With ETL, organizations can analyze their data in multiple locations and formats and make faster and better business decisions based on it.